The Question Analysis Report in OnTarget is your systematic tool for ensuring every test question is fair, accurate, and educationally sound. This validity review process helps you examine questions through multiple lenses to guarantee they measure what you intend them to measure.

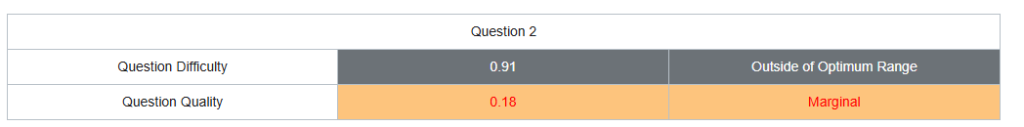

Understanding the Header Section

The top of your analysis shows key information about each question:

- Question Number: Identifies which specific item you’re reviewing

- Difficulty Level: Shows how challenging the question proved for students. Higher values indicate an easier question: values closer to 1.0 mean most students answered it correctly

- Quality Rating: Indicates overall question effectiveness, with flags like “Outside of Optimum Range” or “Marginal” alerting you to potential issues

When you see “Outside of Optimum Range,” it typically means the question was either too easy (most students got it right) or too difficult (few students answered correctly), which limits its usefulness for measuring student learning.
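As a rough illustration of how a difficulty value like this can be derived, the sketch below computes the proportion of students who answered a question correctly. It assumes the difficulty value is the classical proportion-correct statistic; the function name `item_difficulty` and the sample responses are hypothetical and are not taken from OnTarget.

```python
def item_difficulty(scores):
    """Return the proportion of students who answered the item correctly.

    `scores` holds one 0/1 value per student (1 = correct, 0 = incorrect).
    """
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(scores) / len(scores)


# Hypothetical responses for one question: 9 of 10 students answered correctly.
responses = [1, 1, 1, 0, 1, 1, 1, 1, 1, 1]
p_value = item_difficulty(responses)
print(f"Difficulty: {p_value:.2f}")
```

A value this close to 1.0 suggests the question was easy for this group, which is the kind of result that may earn an "Outside of Optimum Range" flag.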

Statistical Flags and Recommendations

Item Revision Criteria

- P-Value Issues:

  - If P > 0.90: Item too easy, consider increasing difficulty

  - If P < 0.20: Item too difficult, review content alignment

- Point Biserial Issues:

  - If rpb < 0.15: Poor discrimination, item may need revision

  - If rpb < 0.00: Negative discrimination, item likely flawed
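The revision criteria above can be sketched in a few lines of Python. This is a minimal illustration, not OnTarget's implementation: `point_biserial` uses the standard textbook formula with the population standard deviation of total scores, and `revision_flags` is a hypothetical helper that applies the thresholds listed above. Because any negative rpb is also below 0.15, the sketch reports only the more severe of the two discrimination flags, which is an assumption about how the overlap should be handled.

```python
from statistics import mean, pstdev


def point_biserial(item_scores, total_scores):
    """Point-biserial correlation between a 0/1 item and total test scores."""
    if len(item_scores) != len(total_scores):
        raise ValueError("inputs must be the same length")
    p = mean(item_scores)          # proportion who answered correctly
    sd = pstdev(total_scores)      # population standard deviation of totals
    if p in (0, 1) or sd == 0:
        return None                # undefined when there is no variation
    correct = [t for i, t in zip(item_scores, total_scores) if i == 1]
    incorrect = [t for i, t in zip(item_scores, total_scores) if i == 0]
    return (mean(correct) - mean(incorrect)) / sd * (p * (1 - p)) ** 0.5


def revision_flags(p, rpb):
    """Apply the P-value and point-biserial thresholds listed above."""
    flags = []
    if p > 0.90:
        flags.append("Item too easy, consider increasing difficulty")
    elif p < 0.20:
        flags.append("Item too difficult, review content alignment")
    if rpb is not None:
        if rpb < 0.00:
            flags.append("Negative discrimination, item likely flawed")
        elif rpb < 0.15:
            flags.append("Poor discrimination, item may need revision")
    return flags


# Hypothetical data: five students, one item, and their total test scores.
item = [1, 1, 1, 0, 0]
totals = [10, 9, 8, 5, 4]
rpb = point_biserial(item, totals)
print(revision_flags(mean(item), rpb))
```

In this made-up example, students who answered the item correctly also scored higher overall, so the item discriminates well and no flags are raised.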

Implementation in OnTarget System

The Question Analysis Report uses these calculations to automatically flag items requiring review based on:

- Statistical Thresholds: Pre-set criteria for P-values and discrimination indices

- Quality Composite Scores: Weighted combinations of multiple statistics

- Bias Detection: Statistical analysis of differential item functioning across demographic groups

- Standards Alignment: Correlation analysis between item performance and learning objectives

These statistical measures help ensure that assessment items meet Texas Education Agency (TEA) technical standards for validity, reliability, and fairness in educational measurement.

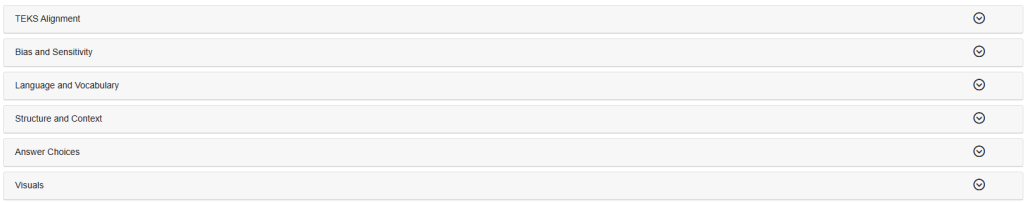

Standards Alignment – Does Your Question Measure What You Think?

This section verifies your question actually measures what it’s supposed to measure:

TEKS Alignment: Ensures your question connects to specific Texas Essential Knowledge and Skills standards

Depth of Knowledge (DOK): Confirms the cognitive demand matches your learning objective

- DOK 1: Recall facts (identify, list, define)

- DOK 2: Apply skills/concepts (describe, compare, calculate)

- DOK 3: Strategic thinking (analyze, evaluate, justify)

- DOK 4: Extended thinking (synthesize, create, design)

Common Mismatch: Teaching students to “analyze” data but only asking them to “identify” facts creates a mismatch between instruction and assessment.
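One way to picture the mismatch check is as a lookup from task verbs to DOK levels. The sketch below uses only the example verbs listed above; the names `DOK_VERBS`, `dok_level`, and `alignment_gap` are hypothetical, and a real review would rely on professional judgment rather than a verb list alone.

```python
# Example verbs for each DOK level, taken from the list above.
DOK_VERBS = {
    1: {"identify", "list", "define"},
    2: {"describe", "compare", "calculate"},
    3: {"analyze", "evaluate", "justify"},
    4: {"synthesize", "create", "design"},
}


def dok_level(verb):
    """Return the DOK level for a verb, or None if the verb is not listed."""
    verb = verb.lower()
    for level, verbs in DOK_VERBS.items():
        if verb in verbs:
            return level
    return None


def alignment_gap(taught_verb, assessed_verb):
    """Return taught level minus assessed level, or None if either is unknown.

    A positive result means the question asks for less than was taught.
    """
    taught = dok_level(taught_verb)
    assessed = dok_level(assessed_verb)
    if taught is None or assessed is None:
        return None
    return taught - assessed


gap = alignment_gap("analyze", "identify")
print(f"DOK gap between instruction and assessment: {gap}")
```

Here instruction at DOK 3 ("analyze") paired with a DOK 1 question ("identify") yields a gap of two levels, which matches the common mismatch described above.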

Bias and Sensitivity – Ensuring Fairness for All Students

This crucial section checks whether your question creates unfair advantages or disadvantages:

Cultural Bias: Avoid references that favor certain backgrounds. A math problem about skiing might disadvantage students who’ve never experienced snow sports.

Socioeconomic Sensitivity: Questions about expensive vacations or private tutoring may create barriers for students from different economic backgrounds.

Stereotypes and Assumptions: Don’t reinforce harmful assumptions about any group or assume all students share the same experiences.

Emotional Sensitivity: Avoid emotionally charged content unrelated to your learning objectives, such as references to divorce, death, or controversial political topics.

Language and Vocabulary – Clear Communication

This section ensures language doesn’t become a barrier to demonstrating knowledge:

Grade-Appropriate Vocabulary: Use words students at this level should reasonably know. Don’t use “precipitation” when “rain” would work for younger students.

Clear, Concise Writing: Eliminate unnecessary complexity. “The student conducted the experiment” is clearer than “The experiment was conducted by the student.”

Consistent Terminology: Don’t randomly switch between “rectangle” and “quadrilateral” within the same question.

Avoiding Wordiness: Remove ambiguous, vague, or irrelevant information that doesn’t contribute to measuring the learning objective.

Appropriate Academic Language: Use vocabulary that matches your grade level without creating unnecessary obstacles.

Structure and Context – Logical Organization

This section examines how well your question is organized and presented:

Clear Instructions: Students should immediately understand what they’re being asked to do.

Realistic Context: Scenarios should be believable and relevant to students’ experiences while supporting the learning objective.

No Unintended Clues: The question structure shouldn’t give away the answer. Making the correct answer noticeably longer than other choices is a common mistake.

Parallel Structure: All components should follow consistent formatting and organization.

Grade-Level Appropriate Context: The situation should be understandable and meaningful for your student population.
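The unintended-clue point about answer length lends itself to a quick automated check. The following is a minimal sketch, assuming a hypothetical rule of thumb that a correct answer at least 1.5 times longer than the longest distractor deserves a second look. The function name and the `ratio` default are illustrative assumptions, not part of OnTarget.

```python
def correct_answer_stands_out(choices, correct_index, ratio=1.5):
    """Return True if the correct choice is much longer than every distractor.

    `ratio` is an assumed cutoff: the correct answer is flagged when its
    character count is at least `ratio` times that of the longest distractor.
    """
    correct_len = len(choices[correct_index])
    distractor_lens = [
        len(choice) for i, choice in enumerate(choices) if i != correct_index
    ]
    if not distractor_lens:
        return False
    return correct_len >= ratio * max(distractor_lens)


# Hypothetical answer choices; the correct answer is at index 2.
choices = [
    "Evaporation",
    "Runoff",
    "The process by which water vapor cools and forms droplets",
    "Transpiration",
]
print(correct_answer_stands_out(choices, correct_index=2))
```

A flagged question is not necessarily flawed, but it is worth checking whether the distractors can be lengthened or the correct answer trimmed so that length alone does not give the answer away.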

Answer Choices – Effective Multiple Choice Construction

For multiple-choice questions, this section ensures your options work effectively:

Plausible Distractors: Wrong answers should reflect common student misconceptions, not obviously silly choices like “purple elephant” that no student would reasonably select.

Content-Based Distractors: Base incorrect options on content students at this grade level are expected to know.

Avoid “Gotcha” Answers: Don’t trick students who actually understand the material with overly technical or misleading options.

One Clearly Correct Answer: Eliminate ambiguity about which choice is definitively right.

Complete Rationales: When required, provide thorough explanations for why answers are correct or incorrect.

Visuals – Supporting Student Understanding

This section evaluates any charts, graphs, images, or diagrams:

Purpose-Driven Graphics: Visual elements should directly support the question’s learning objective, not serve as decoration.

Complete Information: Everything students need to answer should be clearly provided in the visual.

Clarity and Legibility: Students must be able to easily read and interpret all visual elements, including labels, numbers, and symbols.

Accessibility: Graphics should work for students with different visual processing abilities and learning needs.

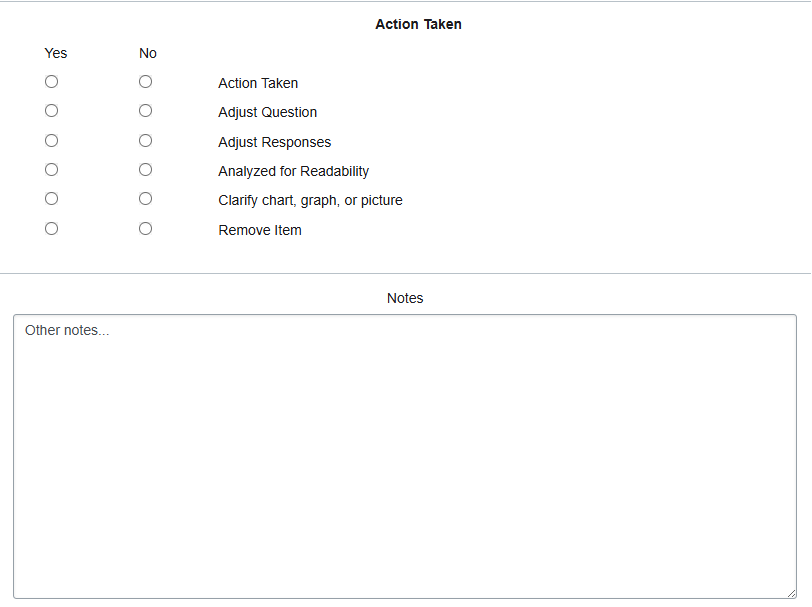

Action Tracking – Documenting Your Decisions

The right panel helps you document and track your review process:

- Action Taken: Record what you decided about each question

- Adjustments Made: Note any modifications to questions or answer choices

- Quality Improvements: Record your analysis of each question's readability and visual clarity

- Item Management: Track decisions about keeping, revising, or removing questions

- Notes: Add observations and reminders for future reference

Why This Process Matters

This systematic review prevents a much larger problem: making instructional decisions based on invalid assessment data. Without proper validity review, you might think a student doesn’t understand fractions when they actually just struggled with complex vocabulary, or assume they lack science knowledge when cultural references created the barrier.

Getting Started: Begin with your most important assessments (unit tests, benchmarks) and gradually work through your question bank. While this process requires an initial time investment, it builds a collection of high-quality, validated questions that will serve your students well.

Building Quality Over Time: Each question you validate becomes part of a reliable assessment toolkit, ultimately saving time and providing more accurate information about student learning.

This validity review process ensures your assessments truly measure student learning rather than unrelated factors, giving you confidence in the educational decisions you make based on your results.