The Question Analysis Report is a comprehensive evaluation interface within OnTarget that provides systematic review and quality assurance for assessment items. This multi-section analysis tool ensures educational content meets technical standards and aligns with Texas Essential Knowledge and Skills (TEKS) requirements.

Header Section

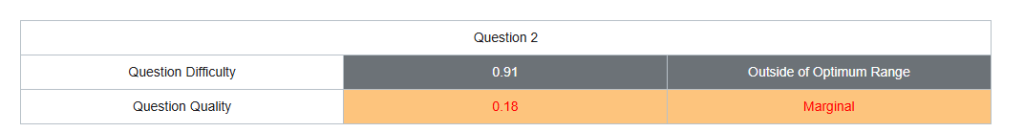

- Question Identification: Displays the specific question number being analyzed

- Difficulty Metrics: Shows the item's statistical difficulty value (0.91 in the example)

- Quality Assessment: Displays the quality score (0.18 in the example) with status indicators (the “Outside of Optimum Range” and “Marginal” classifications)

P-Value (Item Difficulty) Calculations

Formula

P-Value = (Number of students answering correctly) / (Total number of students)

Interpretation Scale

- P = 0.00 to 0.30: Very difficult items

- P = 0.31 to 0.70: Moderate difficulty (optimal range)

- P = 0.71 to 1.00: Easy items

Example from Screenshot

The displayed difficulty value of 0.91 indicates:

P = 0.91 = 91% of students answered correctly

This places the item in the “easy” category, explaining why it’s marked as “Outside of Optimum Range.”
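The P-value formula and interpretation scale above can be sketched in a few lines of Python. This is an illustrative sketch only, not OnTarget's implementation: the function names and the sample class of 100 students are assumptions, and because the scale leaves a gap between 0.30 and 0.31, the sketch treats anything above 0.30 and up to 0.70 as moderate.

```python
def p_value(responses):
    """Proportion of students answering correctly.

    `responses` holds one entry per student: 1 for correct, 0 for incorrect.
    """
    if not responses:
        raise ValueError("at least one response is required")
    return sum(responses) / len(responses)


def difficulty_band(p):
    """Map a P-value onto the interpretation scale described above."""
    if p <= 0.30:
        return "very difficult"
    if p <= 0.70:
        return "moderate (optimal range)"
    return "easy"


# Hypothetical class: 91 of 100 students answered correctly.
responses = [1] * 91 + [0] * 9
p = p_value(responses)
print(p, difficulty_band(p))
```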

Point Biserial Correlation (rpb)

Formula

rpb = ((M₁ - M₀) / St) × √(p × q)

Where:

- M₁ = Mean total score of students who answered the item correctly

- M₀ = Mean total score of students who answered the item incorrectly

- St = Standard deviation of total test scores

- p = Proportion answering correctly (P-value)

- q = Proportion answering incorrectly (1 – p)

Alternative Computational Formula

rpb = (∑XY - (∑X)(∑Y)/N) / √[(∑X² - (∑X)²/N)(∑Y² - (∑Y)²/N)]

Where:

- X = Item scores (1 for correct, 0 for incorrect)

- Y = Total test scores

- N = Number of students
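Both formulas above describe the same quantity, which a short Python sketch can confirm. The function names and the eight-student data set are made up for illustration, and the sketch assumes St is the population standard deviation (dividing by N), which is the convention under which the two formulas agree exactly.

```python
import math


def point_biserial(item_scores, total_scores):
    """rpb = ((M1 - M0) / St) * sqrt(p * q), with St as the population SD."""
    n = len(item_scores)
    correct = [t for x, t in zip(item_scores, total_scores) if x == 1]
    incorrect = [t for x, t in zip(item_scores, total_scores) if x == 0]
    if not correct or not incorrect:
        raise ValueError("need at least one correct and one incorrect response")
    m1 = sum(correct) / len(correct)      # mean total score, item answered correctly
    m0 = sum(incorrect) / len(incorrect)  # mean total score, item answered incorrectly
    p = len(correct) / n
    q = 1 - p
    mean_total = sum(total_scores) / n
    s_t = math.sqrt(sum((t - mean_total) ** 2 for t in total_scores) / n)
    if s_t == 0:
        raise ValueError("total scores have no variance")
    return (m1 - m0) / s_t * math.sqrt(p * q)


def point_biserial_computational(x, y):
    """Alternative computational formula built from raw sums."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_x2 = sum(a * a for a in x)
    sum_y2 = sum(b * b for b in y)
    numerator = sum_xy - sum_x * sum_y / n
    denominator = math.sqrt((sum_x2 - sum_x ** 2 / n) * (sum_y2 - sum_y ** 2 / n))
    return numerator / denominator


# Hypothetical data: item scores (X) and total test scores (Y) for 8 students.
item = [1, 1, 1, 0, 1, 0, 1, 0]
totals = [9, 8, 7, 4, 6, 5, 8, 3]
print(point_biserial(item, totals), point_biserial_computational(item, totals))
```

The two printed values should match up to floating-point rounding.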

Discrimination Index Calculations

Upper-Lower 27% Method

D = PU - PL

Where:

- PU = Proportion of upper 27% group answering correctly

- PL = Proportion of lower 27% group answering correctly

Interpretation Guidelines

- D ≥ 0.40: Excellent discrimination

- D = 0.30-0.39: Good discrimination

- D = 0.20-0.29: Fair discrimination

- D < 0.20: Poor discrimination (review needed)
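As a rough sketch, the upper-lower 27% method could be computed as follows. The function names, the rounding of the group size, and the handling of tied total scores (ties keep their input order) are assumptions made for the example, not details confirmed for OnTarget.

```python
def discrimination_index(item_scores, total_scores, fraction=0.27):
    """D = PU - PL using the top and bottom `fraction` of students by total score."""
    n = len(item_scores)
    if n == 0 or n != len(total_scores):
        raise ValueError("item_scores and total_scores must be non-empty and equal length")
    group_size = max(1, round(n * fraction))
    # Rank student indices by total score, highest first.
    order = sorted(range(n), key=lambda i: total_scores[i], reverse=True)
    upper = order[:group_size]
    lower = order[-group_size:]
    p_upper = sum(item_scores[i] for i in upper) / group_size
    p_lower = sum(item_scores[i] for i in lower) / group_size
    return p_upper - p_lower


def interpret_discrimination(d):
    """Apply the interpretation guidelines listed above."""
    if d >= 0.40:
        return "excellent"
    if d >= 0.30:
        return "good"
    if d >= 0.20:
        return "fair"
    return "poor (review needed)"


# Hypothetical data for 10 students; each group holds round(10 * 0.27) = 3 students.
totals = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]
item = [1, 1, 1, 1, 0, 1, 0, 1, 0, 0]
d = discrimination_index(item, totals)
print(round(d, 2), interpret_discrimination(d))
```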

Quality Score Calculation

Composite Quality Formula

Quality Score = w₁(rpb) + w₂(1 - |P - 0.5|) + w₃(Distractor_Effectiveness)

Where the weights (w₁, w₂, w₃) are predetermined based on assessment priorities.
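The composite formula can be illustrated with a small Python sketch. The weights (0.5, 0.3, 0.2) and the assumption that Distractor_Effectiveness is already scaled from 0 to 1 are placeholders chosen for the example; the actual values OnTarget uses are not stated here, so the output should not be expected to reproduce scores shown in the report.

```python
def quality_score(rpb, p, distractor_effectiveness, weights=(0.5, 0.3, 0.2)):
    """Weighted composite: w1*rpb + w2*(1 - |P - 0.5|) + w3*Distractor_Effectiveness.

    `weights` are hypothetical placeholders, not OnTarget's configured values.
    """
    w1, w2, w3 = weights
    difficulty_term = 1 - abs(p - 0.5)  # highest (1.0) when P is exactly 0.5
    return w1 * rpb + w2 * difficulty_term + w3 * distractor_effectiveness


# Hypothetical inputs: a very easy item with modest discrimination.
score = quality_score(rpb=0.20, p=0.91, distractor_effectiveness=0.5)
print(round(score, 3))
```

Note how the difficulty term penalizes items far from P = 0.5: at P = 0.91 it contributes 0.59 of its weight, compared with the full weight at P = 0.5.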

Example Analysis

Given the screenshot shows Quality = 0.18 marked as “Marginal”:

P-Value = 0.91 (too easy)

Estimated rpb ≈ 0.15-0.25 (likely low due to ceiling effect)

Quality Score = 0.18 (below acceptable threshold)

Statistical Flags and Recommendations

Item Revision Criteria

- P-Value Issues:

  - If P > 0.90: Item too easy, consider increasing difficulty

  - If P < 0.20: Item too difficult, review content alignment

- Point Biserial Issues:

  - If rpb < 0.15: Poor discrimination, item may need revision

  - If rpb < 0.00: Negative discrimination, item likely flawed

- Combined Analysis:

  Item_Flag = (P < 0.2 OR P > 0.9) AND rpb < 0.15
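The revision criteria above translate directly into code. The sketch below is illustrative only; the function names and flag labels are invented for the example, while the thresholds are the ones listed above.

```python
def item_flags(p, rpb):
    """Return the individual revision flags raised by an item's statistics."""
    flags = []
    if p > 0.90:
        flags.append("too easy")
    if p < 0.20:
        flags.append("too difficult")
    if rpb < 0.00:
        flags.append("negative discrimination")
    elif rpb < 0.15:
        flags.append("poor discrimination")
    return flags


def combined_flag(p, rpb):
    """Item_Flag = (P < 0.2 OR P > 0.9) AND rpb < 0.15."""
    return (p < 0.2 or p > 0.9) and rpb < 0.15


# Hypothetical item: very easy and weakly discriminating.
print(item_flags(0.91, 0.10), combined_flag(0.91, 0.10))
```

Because the combined rule uses AND, an item with an extreme P-value is not flagged by it as long as its point biserial stays at or above 0.15.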

Distractor Analysis Formula

Distractor Point Biserial

rpb(distractor) = ((M₀ - M₁) / St) × √(p × q)

Effective distractors should have:

- Negative point biserial correlations

- Attraction from lower-ability students

- rpb values between -0.10 to -0.30
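One common way to compute a point biserial per distractor is to score each student 1 if they chose that option and 0 otherwise, then correlate that indicator with total test scores. The sketch below takes that approach; it is an assumption about how the statistic is applied to each option, and the function names, answer key, and sample responses are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(var_x * var_y)


def distractor_point_biserials(choices, total_scores, answer_key):
    """Point biserial for every chosen option other than the answer key."""
    results = {}
    for option in sorted(set(choices)):
        if option == answer_key:
            continue
        # 1 if the student picked this distractor, 0 otherwise.
        indicator = [1 if c == option else 0 for c in choices]
        results[option] = pearson(indicator, total_scores)
    return results


# Hypothetical responses from 10 students; "A" is the keyed answer.
choices = ["A", "A", "A", "B", "A", "C", "A", "B", "C", "D"]
totals = [10, 9, 8, 4, 7, 3, 9, 5, 2, 6]
for option, r in distractor_point_biserials(choices, totals, "A").items():
    print(option, round(r, 2))
```

In this made-up data every distractor is chosen mostly by lower-scoring students, so each value comes out negative, which is the pattern described above.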

Reliability Impact Calculation

Alpha-if-Item-Deleted

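αnew = ((k-1)/(k-2)) × [1 - (∑Si² - Sj²) / St(-j)²]

After deletion, k - 1 items remain, so the leading factor is (k-1)/(k-2).

Where:

- k = Number of items before deletion

- Si² = Variance of item i

- Sj² = Variance of item being deleted

- St(-j)² = Variance of total scores recomputed without item j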
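A simple way to obtain alpha-if-item-deleted is to drop the item's column and recompute Cronbach's alpha on the remaining items, using the standard form alpha = (k/(k-1)) × (1 - ∑Si²/St²). The sketch below does that rather than applying a shortcut formula; the function names and the score matrix are hypothetical, and population variances are assumed throughout.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a score matrix: one row per student, one column per item."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_if_item_deleted(scores, j):
    """Recompute alpha after removing item j (0-based column index)."""
    reduced = [row[:j] + row[j + 1:] for row in scores]
    return cronbach_alpha(reduced)


# Hypothetical 0/1 scores: 5 students by 4 items.
scores = [
    [1, 1, 1, 1],
    [1, 1, 0, 1],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 0],
]
print("alpha:", round(cronbach_alpha(scores), 3))
for j in range(4):
    print("without item", j + 1, "->", round(alpha_if_item_deleted(scores, j), 3))
```

If alpha rises noticeably when an item is removed, that item is weakening the test's internal consistency and is a candidate for review.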

Implementation in OnTarget System

The Question Analysis Report uses these calculations to automatically flag items requiring review based on:

- Statistical Thresholds: Pre-set criteria for P-values and discrimination indices

- Quality Composite Scores: Weighted combinations of multiple statistics

- Bias Detection: Statistical analysis of differential item functioning across demographic groups

- Standards Alignment: Correlation analysis between item performance and learning objectives

These statistical measures help verify that assessment items meet Texas Education Agency (TEA) technical standards for validity, reliability, and fairness in educational measurement.

Evidence of Validity

This is a validity review checklist used to systematically evaluate test questions to ensure they’re fair, accurate, and appropriately constructed. Here’s what each section helps you examine:

Standards Alignment

- Verifies the question actually measures what it’s supposed to measure

- Ensures it aligns with specific learning standards or objectives

- Confirms the question matches the intended grade level and content area

- State Standards: Does it match your curriculum requirements?

- Depth of Knowledge (DOK): Does the cognitive demand match your learning objective?

  - DOK 1: Recall facts (identify, list)

  - DOK 2: Apply skills/concepts (describe, compare)

  - DOK 3: Strategic thinking (analyze, evaluate)

  - DOK 4: Extended thinking (synthesize, create)

Example issue: Teaching students to “analyze” but asking them to only “identify” creates a mismatch.

Bias and Sensitivity

- Checks for cultural, gender, socioeconomic, or other biases

- Ensures the question doesn’t favor certain groups of students

- Reviews for potentially offensive or insensitive content

- Looks for assumptions about student backgrounds or experiences

This protects all students from unfair disadvantages:

- Cultural bias: Avoid references that favor certain backgrounds (e.g., assuming all students know about skiing)

- Stereotypes: Don’t reinforce harmful assumptions about groups

- Sensitive topics: Avoid emotionally charged content unrelated to learning objectives

Example issue: A math word problem about expensive vacations may disadvantage students from low-income families.

Language and Vocabulary

- Evaluates whether the reading level is appropriate

- Checks for unnecessarily complex or confusing language

- Ensures vocabulary doesn’t create barriers unrelated to the content being tested

- Reviews for clear, concise wording

This ensures language doesn’t become a barrier to demonstrating knowledge:

- Grade-appropriate vocabulary: Use words students at this level should know

- Clear, concise writing: Eliminate unnecessary complexity

- Consistent terminology: Don’t switch between “rectangle” and “quadrilateral” randomly

- Active voice: “Students conducted the experiment” vs. “The experiment was conducted by students”

Example issue: Using “precipitation” instead of “rain” in a 2nd-grade science question.

Structure and Context

- Examines the overall organization and flow of the question

- Checks if the context/scenario is realistic and relevant

- Ensures the question format supports the learning objective

- Reviews for logical progression of information

This ensures the question format supports learning assessment:

- Clear instructions: Students understand what they’re being asked to do

- Appropriate context: Scenarios are realistic and relevant

- No unintended clues: The structure doesn’t give away the answer

- Parallel structure: All answer choices follow the same format

Example issue: Making the correct answer noticeably longer than the distractors.

Answer Choices (for multiple choice questions)

- Evaluates whether distractors are plausible but clearly incorrect

- Checks that the correct answer is definitively right

- Ensures choices are similar in length and complexity

- Reviews for “all of the above” or “none of the above” issues

This ensures multiple-choice questions work effectively:

- Plausible distractors: Wrong answers should reflect common misconceptions

- Grade-level appropriate: Distractors based on content students should know

- Avoid “gotcha” answers: Don’t trick students who actually know the material

- One clearly correct answer: No ambiguity about which choice is right

Example issue: Having distractors like “purple elephant” that no student would reasonably choose.

Visuals

- Checks charts, graphs, images, or diagrams for clarity

- Ensures visuals support rather than confuse the question

- Reviews for appropriate size, resolution, and labeling

- Confirms visuals are accessible to all students

This ensures graphics support rather than hinder assessment:

- Purpose-driven: Charts/images directly support the question’s objective

- Complete information: Everything needed to answer is provided

- Clear and legible: Students can easily read and interpret visuals

- Accessible: Works for students with visual processing differences

Example issue: Including a blurry graph where students can’t read the numbers on the axes.

How to Use This Checklist

- Review each question systematically through all six lenses

- Mark “No” for any area that needs improvement

- Revise the question before using it with students

- Document your changes for future reference

- Build a bank of validated questions over time

This process might seem time-consuming initially, but it prevents the much larger problem of making instructional decisions based on invalid assessment data. Start with the most important assessments (unit tests, benchmarks) and gradually work through each question bank.

Here’s why this matters so much

Analyzing assessments for validity is critical for ensuring tests actually do what they’re supposed to do.

Validity ensures the assessment actually measures the intended learning objectives, not unrelated factors like reading ability when testing math concepts, or cultural knowledge when testing science skills. Without validity, one might think a student doesn’t understand fractions when they actually just struggled with complex vocabulary.