What is Item Analysis?

Item Analysis is like a report card for your test questions. Just as you evaluate your students’ performance, this tool evaluates how well each question on your test is working. It helps you identify which questions are doing their job effectively and which ones might need some attention.

The Two Key Measurements

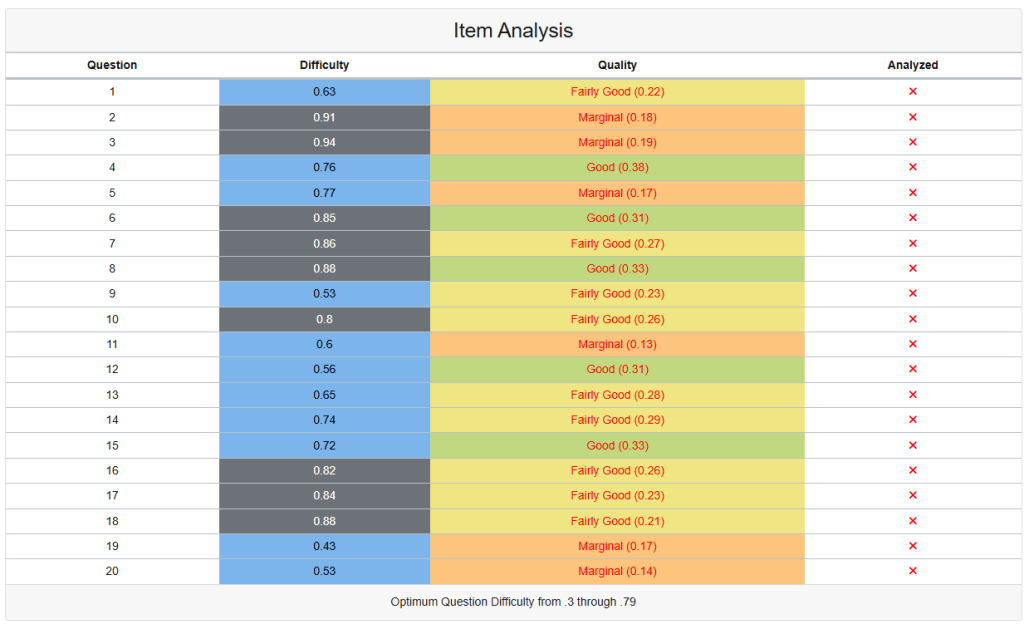

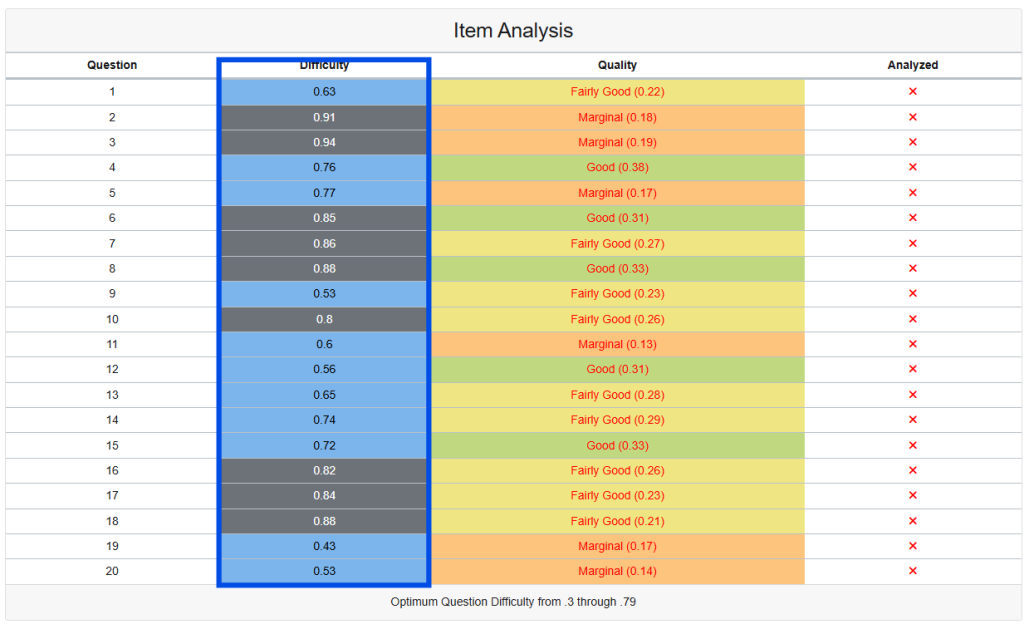

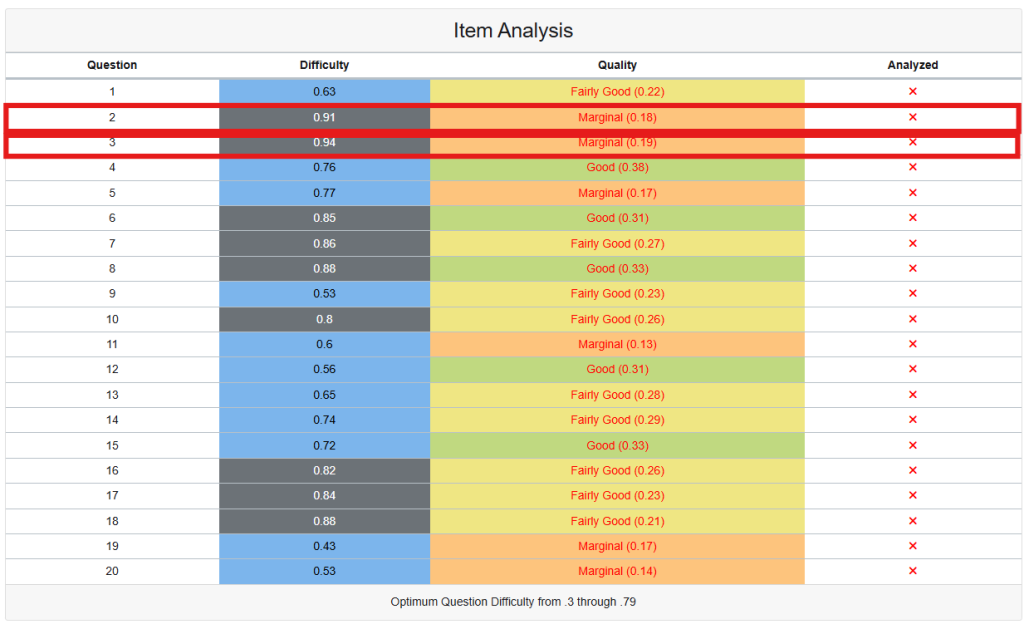

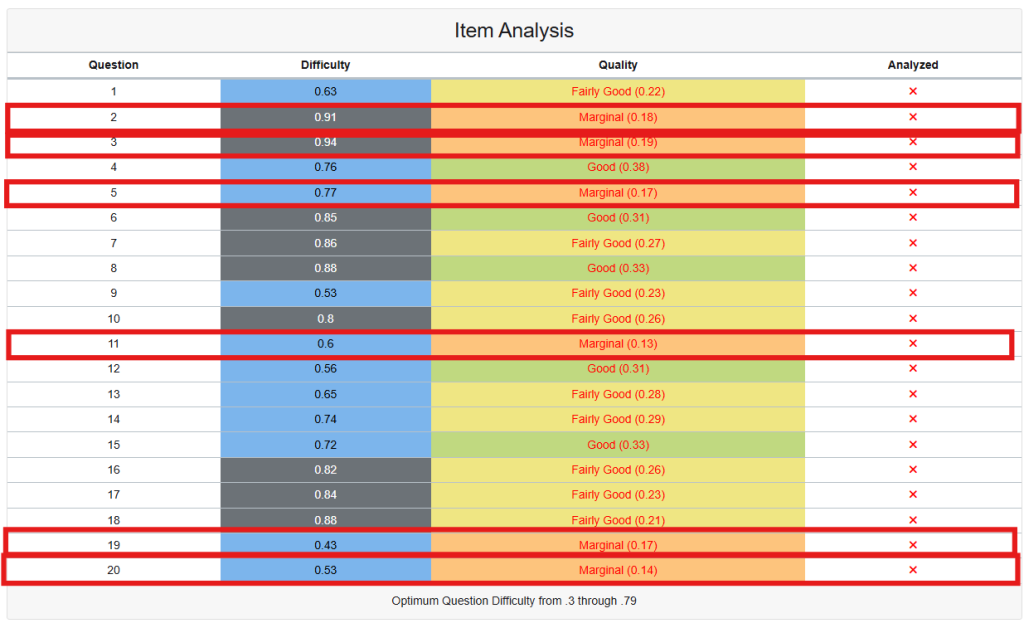

Question Difficulty (P-Value)

Think of this as asking: “How many students got this question right?” The number you see is the proportion of students who answered correctly, shown as a decimal. For example, 0.75 means 75% of students got the question right.
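If you want to check a P-value by hand, the arithmetic is just correct answers divided by total answers. Here is a minimal sketch in Python; the function name `item_difficulty` and the sample scores are made up for illustration and are not taken from your report.

```python
def item_difficulty(responses):
    """Return the proportion of students who answered an item correctly.

    `responses` holds one entry per student: 1 for correct, 0 for incorrect.
    """
    if not responses:
        raise ValueError("need at least one student response")
    return sum(responses) / len(responses)


# Hypothetical data: 10 students, 7 of whom answered correctly.
question_scores = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]
p_value = item_difficulty(question_scores)
print(f"P-value: {p_value:.2f}")  # prints "P-value: 0.70"
```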

What the Numbers Mean:

- 0.90 and above: Almost everyone got it right (very easy)

- 0.30 to 0.79: Just right – some students get it, others don’t (ideal range)

- Below 0.30: Very few students got it right (very difficult)
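The ranges above can be turned into a small lookup, sketched below. One assumption to flag: this guide does not label P-values from 0.80 to 0.89, so the sketch reports that band as unlabeled rather than guessing. The function name `describe_difficulty` is hypothetical.

```python
def describe_difficulty(p_value):
    """Map a P-value (0.0 to 1.0) to the difficulty labels used in this guide."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("P-value must be between 0.0 and 1.0")
    if p_value >= 0.90:
        return "very easy"
    if p_value >= 0.80:
        # Assumption: the guide gives no label for 0.80 to 0.89.
        return "not labeled in this guide"
    if p_value >= 0.30:
        return "ideal range"
    return "very difficult"


# Hypothetical P-values for three questions.
for p in (0.95, 0.55, 0.20):
    print(p, "->", describe_difficulty(p))
```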

Looking at Your Results:

- Questions 2 and 3 were answered correctly by over 90% of students – these might be too easy

- Questions 19 and 20 were more challenging, with about half the students getting them right

- Most of your questions fell in the middle range, which is good

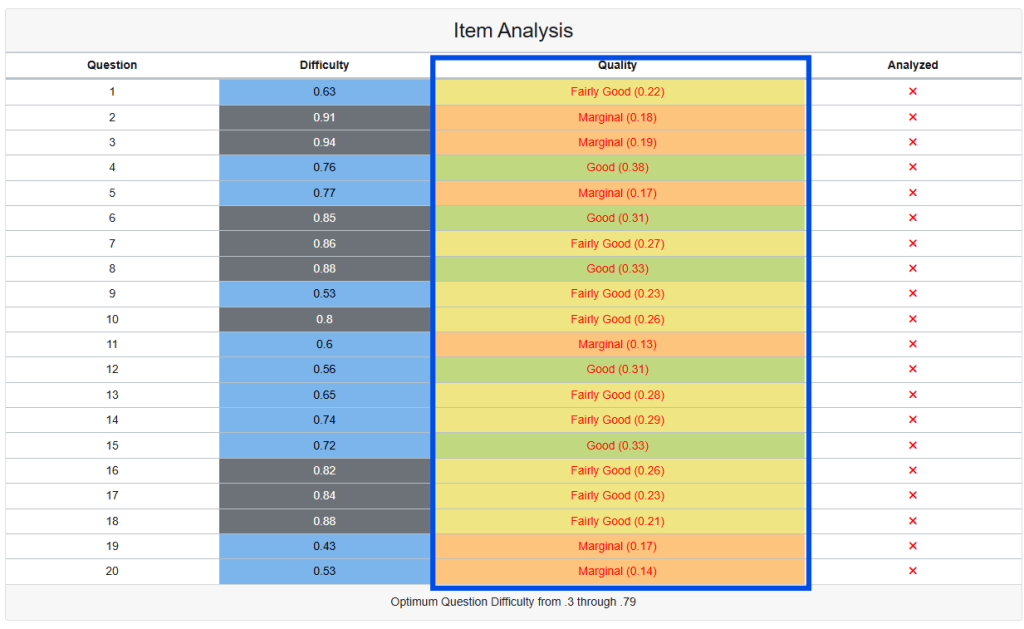

Question Quality (Point Biserial Correlation)

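This measures how well a question separates strong students from weaker students. Specifically, it asks: “Do students who performed well on the overall test also tend to get this individual question right?” The value can range from -1 to +1. The higher the positive value, the more closely success on this question tracks success on the test as a whole. A value near zero or below means the question is not telling strong and weak students apart.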
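For readers curious about the calculation, a point biserial correlation is the ordinary (Pearson) correlation between a right/wrong item score and each student's total test score. The sketch below shows one way to compute it; the function name and the sample data are hypothetical, and your reporting tool may calculate it slightly differently (for example, by leaving the question itself out of the total score).

```python
from math import sqrt


def point_biserial(item_scores, total_scores):
    """Correlate a 0/1 item score with total test scores, one pair per student."""
    n = len(item_scores)
    if n != len(total_scores) or n < 2:
        raise ValueError("need matching lists with at least two students")
    mean_item = sum(item_scores) / n
    mean_total = sum(total_scores) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((i - mean_item) * (t - mean_total)
              for i, t in zip(item_scores, total_scores))
    var_item = sum((i - mean_item) ** 2 for i in item_scores)
    var_total = sum((t - mean_total) ** 2 for t in total_scores)
    if var_item == 0 or var_total == 0:
        raise ValueError("undefined when every student has the same score")
    return cov / sqrt(var_item * var_total)


# Hypothetical data: the three highest scorers got the item right.
item = [1, 1, 1, 0, 0]
totals = [18, 16, 15, 9, 7]
print(round(point_biserial(item, totals), 2))  # a positive value close to 1
```

In this made-up example only the top scorers answered correctly, so the result is strongly positive. If the pattern were reversed, the value would be negative, which is a sign that the question needs review.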

What the Colors Mean:

- Green (Good): This question effectively distinguishes between strong and weak students

- Yellow (Fairly Good): The question works reasonably well but could be improved

- Orange (Marginal): The question isn’t doing a great job of separating students by ability

- Red (Poor): This question should be reviewed or removed

What This Means for Your Teaching

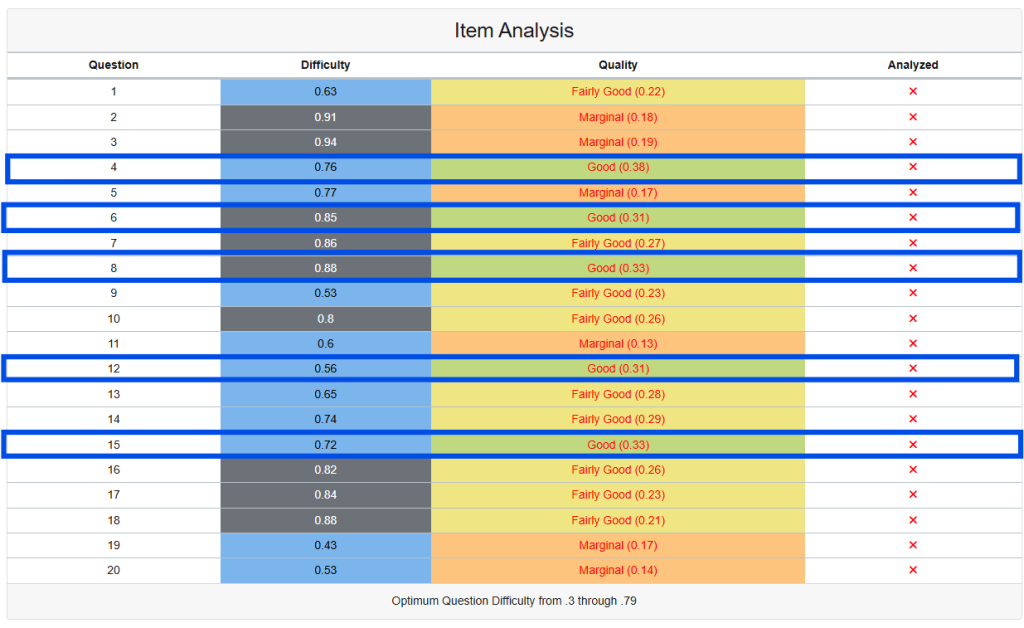

Questions That Are Working Well

Questions 4, 6, 8, 12, and 15 are your “gold standard” questions. They:

- Have appropriate difficulty levels

- Effectively separate students who understand the material from those who don’t

- Should be kept as-is for future tests

Questions That Need Attention

Too Easy (Questions 2 & 3):

- Nearly all students got these right

- They’re not helping you identify who truly understands the material

- Consider making them more challenging or adding more complex answer choices

Poor Discrimination (Questions 2, 3, 5, 11, 19, 20):

- These questions aren’t effectively separating strong students from struggling students

- This could mean:

  - The question is confusing or poorly worded

  - The wrong answers aren’t believable enough

  - The question doesn’t align well with what you’re really trying to measure
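One way to check whether the wrong answers are believable is to count how many students chose each one. A wrong answer that almost nobody picks is probably not plausible. The sketch below assumes you have a list of the answer letters students selected; the function name `distractor_counts` and the data are hypothetical.

```python
from collections import Counter


def distractor_counts(chosen_answers, options, correct_answer):
    """Count how many students picked each wrong answer option."""
    counts = Counter(chosen_answers)
    # Counter returns 0 for options nobody selected.
    return {opt: counts[opt] for opt in options if opt != correct_answer}


# Hypothetical data: eight students, correct answer is "B".
answers = ["B", "B", "A", "B", "C", "B", "A", "B"]
print(distractor_counts(answers, ["A", "B", "C", "D"], "B"))
# prints {'A': 2, 'C': 1, 'D': 0}
```

Here option D was never chosen, so it would be a good candidate for rewriting.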

Practical Steps for Improvement

Before Your Next Test:

- Review marginal questions (orange): Look for unclear wording, confusing grammar, or unrealistic answer choices

- Examine very easy questions: Consider whether they’re testing important concepts or just recall of simple facts

- Check alignment: Ensure questions match your learning objectives and instruction

During Test Review:

- Pay special attention to questions with poor discrimination when going over answers with students

- Ask students what made certain questions confusing or tricky

- Note patterns in incorrect answers to improve future versions

For Future Assessments:

- Keep your green questions – they’re working well

- Revise yellow questions – small improvements can make them more effective

- Seriously consider replacing orange questions – they may not be serving their purpose

- Review or remove any red questions – they are not separating strong students from weaker ones

Why This Matters

Good test questions do more than just assign grades – they:

- Help you accurately identify which students need additional support

- Provide reliable feedback about your instruction

- Build student confidence by being fair and clear

- Save you time by reducing disputes about “tricky” questions

Remember, even experienced educators regularly review and improve their assessment items. This analysis gives you objective data to make your tests more effective tools for both teaching and learning.

The Bottom Line

Your item analysis shows that most of your questions are working well, with several standout performers. Focus your revision efforts on the few questions that aren’t effectively measuring student understanding, and you’ll have a stronger assessment that better serves both you and your students.